Tokenization

How Cards Are Turning Invisible To Advance Seamless Payments, Improve Security & Reduce Fraud & Chargebacks

While the industry keeps focusing on how agentic commerce will affect payments, some are already thinking about the problems that will have to be solved before we can make that leap.

One of those things is how we deal with authentication, especially as people won’t necessarily be the ones holding on to the PAN.

That’s why a lot of my recent conversations have been about how tokenization is going to play a crucial role in the coming years.

Mastercard has already committed to 100% e-commerce tokenization in Europe by 2030. Visa isn’t far behind, as it has already tokenized 50% of all digital transactions globally.

The 16-digit card number is on its way out.

This isn’t another incremental security upgrade. It’s the most significant transformation in payment infrastructure since EMV chips replaced magnetic stripes. And unlike that transition, which took nearly two decades, this one’s moving fast.

The numbers tell the story.

$20 billion is lost to credit card fraud annually.

71% cart abandonment rates in e-commerce.

Authorization rates are hovering around 85% when they should be higher.

Tokenization addresses all three.

Let me explain what’s actually happening, why 2030 matters, and what it means for payments companies across the world.

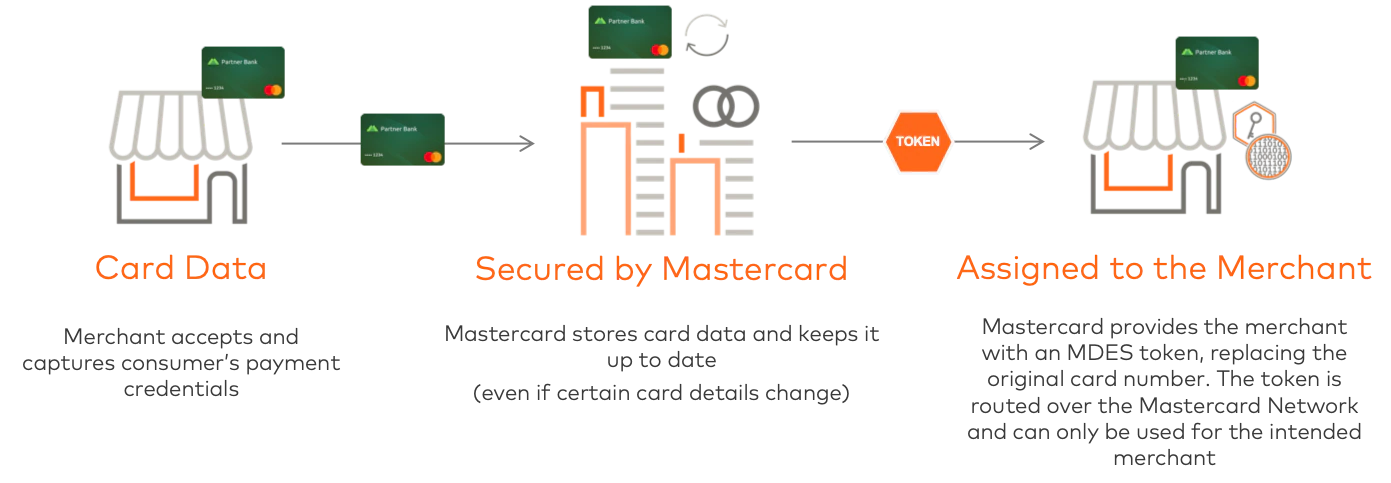

What Tokenization Actually Does

Strip away the technical layers, and tokenization does one thing: it replaces your card number with something that can’t be used if stolen.

When you add a card to Apple Pay, you’re not storing your actual card number on your phone. You’re storing a token, a substitute number that only works in specific contexts. That token’s useless to anyone who steals your phone data. It won’t work at other merchants. It expires. It can be revoked remotely.

Network tokenization takes this concept and applies it everywhere.

Visa calls it VTS, Visa Token Service. Mastercard uses MDES, Mastercard Digital Enablement Service. American Express, Discover, JCB, and even UnionPay have their own versions.

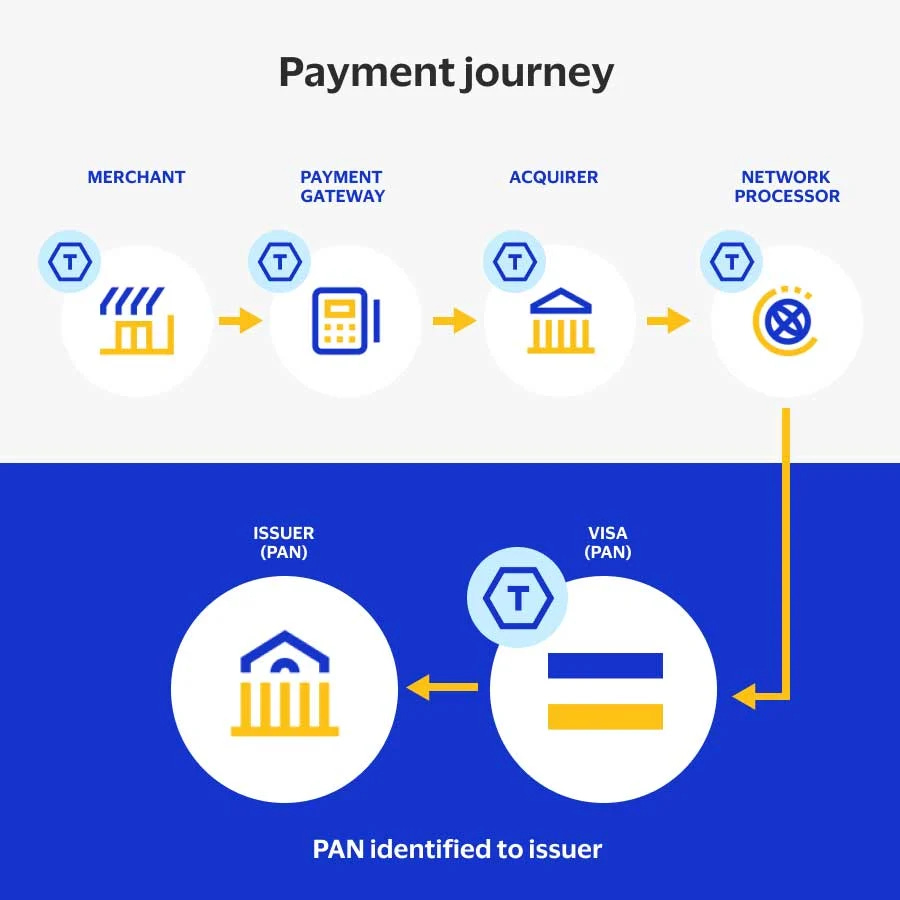

The token sits in place of your card number throughout the payment chain. The merchant never sees your real credentials. The payment processor handles tokens. Even the data stored on file for subscriptions uses tokens instead of raw PANs (primary account numbers).

Here’s what makes network tokens different from the merchant tokens you might already use.

Network tokens update automatically when your card expires or gets reissued. They include additional authentication data that boosts authorization rates. And they’re portable across processors if implemented correctly.

The issuer controls the token through the card network. They can see each transaction, even though it’s going through a token. They know where it’s being used, by whom, and can shut it down instantly if something looks wrong.
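
To make the mechanics concrete, here’s a minimal Python sketch of the idea. It’s a toy model, not how VTS or MDES are actually built: the class name `ToyTokenVault`, the `tok_` prefix, and every method on it are my own assumptions for illustration. Real network tokens are card-shaped numbers issued by the networks, and each transaction also carries a cryptogram, which I’ve left out.

```python
import secrets
from dataclasses import dataclass
from datetime import date


@dataclass
class TokenRecord:
    pan: str             # real card number, known only to the vault
    merchant_id: str     # the single merchant this token is valid for
    expires: date        # token expiry, independent of the card's expiry
    revoked: bool = False


class ToyTokenVault:
    """Hypothetical stand-in for a token service; not a real network API."""

    def __init__(self) -> None:
        self._records: dict[str, TokenRecord] = {}

    def provision(self, pan: str, merchant_id: str, expires: date) -> str:
        # The merchant only ever receives this random substitute value.
        token = "tok_" + secrets.token_hex(8)
        self._records[token] = TokenRecord(pan, merchant_id, expires)
        return token

    def authorize(self, token: str, merchant_id: str, today: date) -> bool:
        rec = self._records.get(token)
        if rec is None or rec.revoked:
            return False
        if rec.merchant_id != merchant_id:   # stolen tokens fail elsewhere
            return False
        return today <= rec.expires

    def revoke(self, token: str) -> None:
        # Kills one token; the underlying card keeps working.
        self._records[token].revoked = True

    def reissue_card(self, old_pan: str, new_pan: str) -> None:
        # Tokens stay the same for the merchant; only the mapping changes.
        for rec in self._records.values():
            if rec.pan == old_pan:
                rec.pan = new_pan


vault = ToyTokenVault()
token = vault.provision("4111111111111111", "merchant-a", date(2030, 1, 1))
print(vault.authorize(token, "merchant-a", date(2026, 6, 1)))  # True
print(vault.authorize(token, "merchant-b", date(2026, 6, 1)))  # False
vault.reissue_card("4111111111111111", "4000000000000002")
print(vault.authorize(token, "merchant-a", date(2026, 6, 1)))  # True
vault.revoke(token)
print(vault.authorize(token, "merchant-a", date(2026, 6, 1)))  # False
```

The point of the sketch is the separation: the merchant holds only the token, while the mapping to the real card, the expiry, and the kill switch all live on the issuer and network side.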

That’s the technical foundation. Now, let’s look at why this became urgent enough for Mastercard to set a deadline.

The Breaking Point That Made 2030 Inevitable

Tokenization isn’t new. Visa launched VTS in 2014. Mastercard introduced MDES the same year. Apple Pay popularized the concept for consumers.

But adoption stayed lumpy. Merchants saw the infrastructure cost and delay. Payment service providers built halfway solutions. Issuers tokenized mobile wallets but left e-commerce on legacy rails.

Then three things converged.

First, fraud got worse and more expensive. E-commerce fraud losses hit $48 billion globally in 2024. Account takeover attacks doubled. Card testing schemes automated by bots became trivial to execute. The old model, where merchants stored card numbers, became indefensible.

Second, regulations tightened. PSD2 in Europe forced strong customer authentication. India’s RBI mandated tokenization for card-on-file transactions, reaching near 100% compliance. Other markets watched and prepared similar rules.

Third, AI agents arrived. You can’t give an AI your card number and let it shop autonomously. The security model breaks entirely. Tokenization with mandate controls became the only viable path forward.

Mastercard saw this convergence and made the call: 100% European e-commerce tokenization by 2030.

Not a goal. A commitment. They’re eliminating manual card entry and passwords entirely through a combination of tokenization, Click to Pay, and biometric authentication they call Payment Passkeys.

Visa’s taking a different path.

No explicit deadline, but they’re scaling aggressively. 12.6 billion network tokens provisioned. 50% of digital transactions. 44% year-over-year growth. They’re betting speed beats deadlines.

Both approaches lead to the same place. By 2030, manually typing a 16-digit card number into a checkout form will feel as outdated as pulling out a paper checkbook.

The Measurable Impact No One’s Talking About Loudly Enough

So why is this important for payment companies and merchants?

Fraud reduction isn’t marginal.

It’s 30% to 40% lower with network tokens versus raw card numbers.

Visa’s data shows tokenized CNP transactions run at 40% lower fraud rates. For a merchant processing $100 million annually with a 0.5% fraud rate, tokenization saves $150,000 to $200,000 in direct losses.
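
If you want to run the same numbers for your own volume, the arithmetic is short. This is a back-of-the-envelope Python sketch; the function name `fraud_savings` is mine, and it assumes the 30% to 40% reduction applies evenly to all of your fraud losses, which is a simplification.

```python
def fraud_savings(annual_volume: float, fraud_rate: float, reduction: float) -> float:
    """Direct fraud losses avoided, given a fractional reduction in fraud."""
    baseline_losses = annual_volume * fraud_rate
    return baseline_losses * reduction


# The example from the text: $100 million volume, 0.5% fraud rate.
for reduction in (0.30, 0.40):
    saved = fraud_savings(100_000_000, 0.005, reduction)
    print(f"{reduction:.0%} reduction: ${saved:,.0f}")
# 30% reduction: $150,000
# 40% reduction: $200,000
```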

Authorization rate improvements matter more than fraud savings for most businesses. Mastercard reports 3 to 6 percentage point increases. Visa sees 4.6% lifts globally. American Express shows 2.75% average improvements.

Let’s do the math on a subscription business.

A 5% authorization lift on $50 million in recurring revenue is $2.5 million in recovered transactions. That’s money you were already owed but couldn’t collect because the payment failed.
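
Here’s that calculation as a small Python sketch, run across the 3 to 6 point range Mastercard reports as well as the 5% case. The function name `recovered_revenue` is my own, and I’m assuming the lift applies evenly to the full recurring revenue figure, so treat the output as a rough estimate.

```python
def recovered_revenue(recurring_revenue: float, lift_points: float) -> float:
    """Revenue recovered when approvals rise by `lift_points` percentage points."""
    return recurring_revenue * lift_points / 100


for points in (3, 5, 6):
    recovered = recovered_revenue(50_000_000, points)
    print(f"{points}-point lift: ${recovered:,.0f}")
# 3-point lift: $1,500,000
# 5-point lift: $2,500,000
# 6-point lift: $3,000,000
```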

Conversion rates tell the retention story.

Click to Pay implementations, which use tokenization as the security layer, show up to 35% increases in checkout completion. Even conservative estimates put the improvement at 10% to 15%. For high-value products with 60% cart abandonment, that’s transformative.

The operational benefits compound over time.

Automatic credential updates eliminate the involuntary churn when cards expire. Subscription businesses lose 9% of revenue to failed payments. Network tokenization fixes roughly half of those failures without any customer action required.

India offers real-world proof at scale.

After the RBI mandate, the market moved to near 100% e-commerce tokenization in 18 months. Fraud rates dropped. Authorization rates climbed. Merchants who complained about implementation costs saw ROI within quarters.

That’s what 2030 looks like when you reverse-engineer from market data instead of press releases.

How Tokenization Changes the Payment Experience Completely

The consumer-facing changes are obvious. Faster checkouts. No more pulling out your wallet to type in numbers. Biometric authentication instead of passwords. One-click everything.

The backend transformation matters more for payments companies.

If merchants stop storing card numbers entirely, your PCI compliance scope shrinks dramatically, in some cases by 90%. Security audits get simpler. Breach liability drops because there’s nothing valuable to steal. A hacker who grabs your token database gets useless data.

Payment service providers gain new leverage points.

If you support network tokenization well, you’re providing measurable value in authorization rates and fraud reduction. If you don’t, merchants will switch to providers who do. The PSPs that built robust tokenization platforms three years ago are winning deals today.

Issuers get unprecedented visibility and control. They see every transaction attempt, even though it’s flowing through a token they manage. They can apply risk models at the network level. They can shut down suspicious patterns instantly. And they reduce their own fraud losses substantially.

Acquirers face infrastructure investments but gain processing efficiency.

Token transactions include cryptograms and additional authentication data that make fraud detection more accurate. False declines drop. Legitimate transactions flow through faster.

The shift also changes competitive dynamics.

Visa’s scale advantage becomes more valuable when network effects matter more. Mastercard’s aggressive timeline forces the market to move faster than it otherwise would. Smaller networks like Discover and JCB have to decide whether to compete on features or interoperability.

And then there’s the latest development, agentic commerce. Let’s dive into why tokenization is necessary.

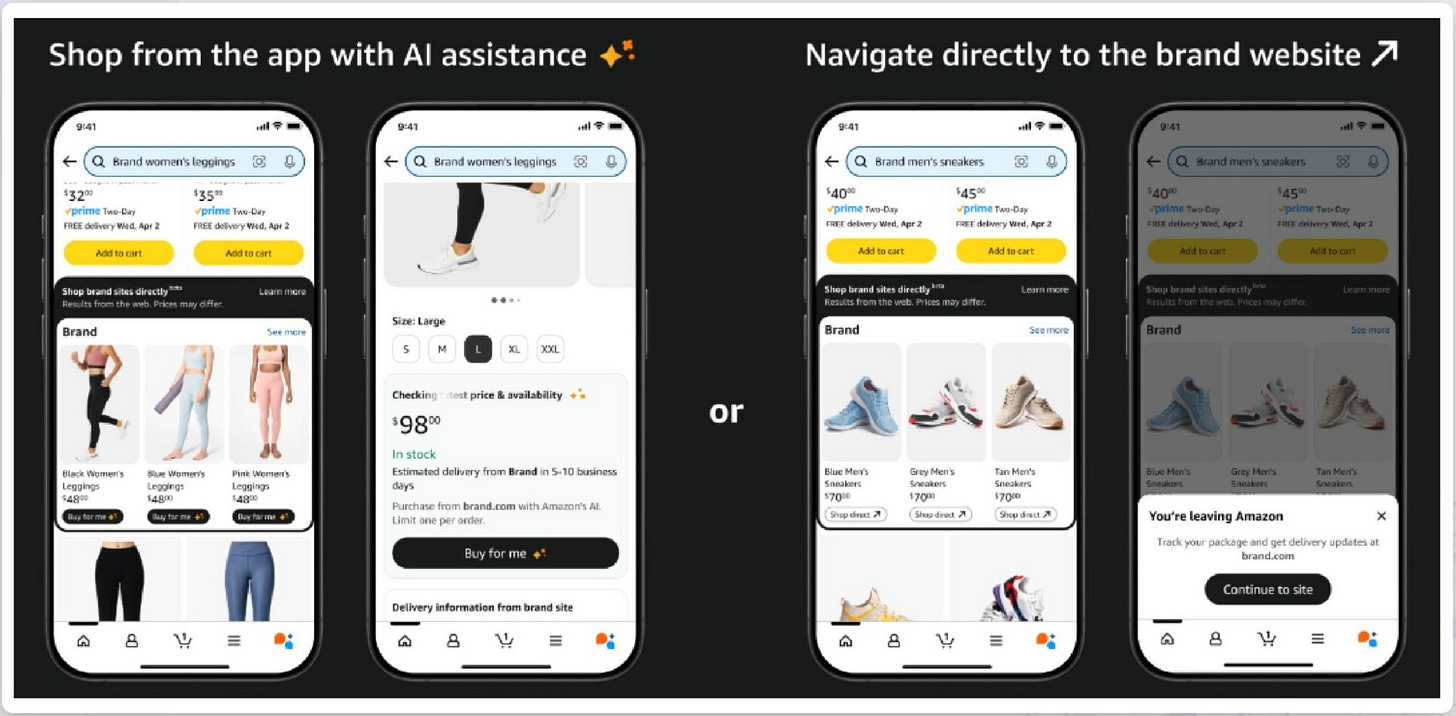

AI Agents Need Tokenization To Function Safely

The simplest answer?

You can’t hand an AI agent your credit card number and tell it to go shopping. The security model would immediately collapse.

Tokenization with mandate controls solves this.

Visa is building what they call AI-Ready Cards, tokenized credentials that confirm an agent is authorized to act. Mastercard is developing Agentic Tokens, programmable payment credentials that activate only under specific conditions.

Last week, we learned about Google’s Agent Payments Protocol, AP2, which aims to establish the technical standard. Their protocol uses a three-mandate architecture: Intent Mandates for pre-authorized conditions, Cart Mandates for final verification, and Payment Mandates for network authorization. It is still too early to see which approach will become the standard.

However, real implementations already exist.

Amazon’s “Buy for Me” feature lets AI complete purchases autonomously. OpenAI’s Operator navigates websites and buys items through chat interfaces. Perplexity’s “Buy with Pro” enables purchasing directly from search results.

These systems only work because of tokenization. The agent never sees your card number. It gets a temporary, limited-use token with spending constraints. If the agent’s compromised or misbehaves, you revoke the token without canceling your card.

The mandate layer adds programmable controls. You can set spending limits, restrict merchant categories, require approval for purchases over certain amounts, or limit the agent to specific time windows. Smart contracts enforce these rules automatically.
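
Here’s a rough Python sketch of what those controls look like as logic. To be clear, this is my own illustration and not the AP2 schema or any network’s API: the `Mandate` fields, the `evaluate` function, and the three outcome strings are all hypothetical, and I’m using a plain function here just to show the rules themselves.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Mandate:
    per_purchase_limit: float           # hard cap per transaction
    approval_threshold: float           # above this, ask the cardholder first
    allowed_categories: frozenset[str]  # merchant categories the agent may use
    window_start: time                  # earliest time of day the agent may buy
    window_end: time                    # latest time (no overnight wrap handled)


def evaluate(mandate: Mandate, amount: float, category: str, at: time) -> str:
    """Return 'decline', 'needs_approval', or 'approve' for an agent purchase."""
    if category not in mandate.allowed_categories:
        return "decline"
    if not (mandate.window_start <= at <= mandate.window_end):
        return "decline"
    if amount > mandate.per_purchase_limit:
        return "decline"
    if amount > mandate.approval_threshold:
        return "needs_approval"
    return "approve"


mandate = Mandate(
    per_purchase_limit=200.0,
    approval_threshold=75.0,
    allowed_categories=frozenset({"grocery", "household"}),
    window_start=time(8, 0),
    window_end=time(20, 0),
)
print(evaluate(mandate, 40.0, "grocery", time(10, 30)))       # approve
print(evaluate(mandate, 120.0, "grocery", time(10, 30)))      # needs_approval
print(evaluate(mandate, 40.0, "electronics", time(10, 30)))   # decline
print(evaluate(mandate, 40.0, "grocery", time(23, 0)))        # decline
```

The design choice worth noticing is that the agent never gets to decide any of this. The rules travel with the credential, so a misbehaving agent hits a decline instead of your card.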

This matters for payments companies because agentic commerce is growing fast.

Visa’s already working with Anthropic, IBM, Microsoft, OpenAI, and Perplexity. Mastercard’s integrated with Microsoft Azure OpenAI Service and IBM WatsonX Orchestrate. These aren’t just pilot programs; they are already in production.

By 2030, a meaningful percentage of e-commerce transactions will originate from AI agents, not humans clicking buttons. Tokenization is what makes all of this possible.

What Digital Wallets and Virtual Cards Already Prove

We don’t need to theorize about tokenization’s impact. Digital wallets and virtual cards have already shown what happens at scale.

Apple Pay processes over $686 billion annually across 100+ million US users.

Every transaction uses network tokenization. The fraud rate is 90% lower than traditional card-present transactions. Authorization rates run higher than manual entry. Merchants that accept Apple Pay see measurable conversion improvements.

Google Pay, Samsung Pay, and other wallets follow the same pattern.

Token-based transactions perform better on every metric that matters. Consumers prefer them. Issuers see less fraud. Merchants get higher approval rates.

Virtual cards demonstrate even more dramatic fraud resistance. They’re seven times less susceptible to fraud than check payments. Transaction volumes grew from $1.6 trillion in 2020 to a projected $5 trillion by 2025. The security model works because each virtual card is essentially a controlled token, either single-use or merchant-specific.

Click to Pay, the networks’ answer to PayPal and Apple Pay for browser-based checkout, shows how tokenization enables frictionless experiences. It’s live at 10,000+ retailers with 125% year-over-year growth. Visa reports a 4.3% authorization rate lift. Mastercard says 94% of US Mastercard consumer e-commerce spend is eligible.

The card-on-file use case matters most for subscription businesses.

Tokenization with automatic updates eliminates the primary cause of involuntary churn. When a customer’s card expires, the token updates automatically across all merchants who have it on file. No “payment method failed” emails. No lost subscribers because they didn’t update their card.

Marqeta, Stripe, and other modern processors built their platforms on tokenization from day one. They’re not retrofitting legacy systems. That architectural choice gives them advantages in speed, security, and feature development that older processors struggle to match.

The pattern’s clear: tokenization isn’t coming. It’s already here in every high-performance payment channel.

Implementation Barriers Are Real But Surmountable

Tokenization clearly isn’t going anywhere, so let’s look at what’s actually hard about it. Ignoring implementation challenges doesn’t help anyone.

Vendor lock-in tops the list.

Not all payment processors support network tokenization equally. Some only support specific networks. Others charge premium fees for token provisioning. Switching processors can mean re-tokenizing your entire stored credential base, which creates massive operational overhead.

Legacy system integration causes real pain.

You’re running parallel systems during transition. You need to handle returns and chargebacks on transactions that originated with raw PANs. Your reporting tools need to work with both token and non-token transactions. Your reconciliation processes get more complex before they get simpler.

The skills gap compounds technical challenges.

DLT-based workflows, smart contract integration, and blockchain operations require different expertise than traditional payment processing. Your team needs training. Your vendors need compatible capabilities. The industry is short on people who understand both legacy payment rails and modern tokenization infrastructure.

Regional regulatory differences create compliance complexity.

The US maintains fragmented approaches across multiple agencies. Europe provides comprehensive frameworks, but adds GDPR requirements. Asia-Pacific regions offer varying CBDC integration approaches. Cross-border transactions require compliance with multiple frameworks simultaneously.

Cost comes up in every merchant conversation.

Infrastructure investment isn’t trivial. You’re integrating new APIs, potentially upgrading point-of-sale systems, retraining staff, and running dual systems during migration. Small and mid-sized merchants struggle to justify the ROI when their current setup works.

Here’s the uncomfortable truth: these barriers are real, but they’re not reasons to delay.

India proved that mandate-driven adoption works. The market moved to near-universal tokenization in 18 months once the RBI required it. Europe will follow a similar path by 2030.

The merchants and PSPs who move early capture the benefits while everyone else is still planning. The ones who wait until regulation forces their hand will pay more and gain less competitive advantage.

Strategic Framework for PSPs and Acquirers

If you’re a payment service provider or acquirer, your tokenization strategy determines your competitive position for the next decade.

Start with an infrastructure assessment.

Do you support Visa Token Service and Mastercard MDES natively, or are you relying on partners? Can you provision tokens in real-time, or is there batch processing delay? Do your tokens work across multiple processors, or are they locked to your infrastructure?

Invest in vault-less architecture if you’re building new systems.

Basis Theory, VGS, and similar providers offer PCI Level 1 compliant tokenization without forcing merchants into processor lock-in. This flexibility becomes valuable as merchants increasingly demand processor-agnostic solutions.

Build credential updating automation.

Automatic credential updates are a killer feature for subscription businesses. If you can’t offer this, you’re losing deals to providers who can. The technical lift is manageable. The business value is substantial.

Develop agentic commerce capabilities now, not later.

Partner with AI platforms. Build mandate management systems. Create sandbox environments for testing agent-initiated transactions. By 2027, merchants will expect this. By 2030, it’ll be the standard.

Price tokenization services strategically.

Some PSPs charge premium fees for token provisioning and management. Others bundle it into standard processing. The merchants who benefit most from tokenization, high-volume subscription businesses and digital goods sellers, are also the most price-sensitive. Figure out your pricing model before competition forces a race to the bottom.

Focus on authorization rate improvements in your sales process.

That’s the metric that matters most to merchants. Show concrete data on lift percentages. Offer A/B testing to prove the value. Make authorization rate improvement your primary differentiation point.

For acquirers specifically, tokenization changes fraud liability models. Work with your underwriting teams to adjust risk assessments for tokenized versus non-tokenized merchants. The fraud rates diverge significantly. Your pricing should reflect that.

Strategic Framework for Merchants

Merchants face different decisions, but equally important ones.

Prioritize Click to Pay integration if you’re in e-commerce.

It’s the fastest path to tokenization benefits without building custom infrastructure. It’s supported by all major networks. And it delivers measurable conversion improvements. The ROI case is straightforward.

Evaluate your PSP’s tokenization capabilities objectively.

Ask specific questions: Do they support network tokens from all major networks? Can they provision tokens in real-time? Do they handle automatic credential updates? What’s their token portability if you switch processors? Don’t accept vague reassurances. Get technical specifications.

Implement tokenization for card-on-file credentials first.

This is your highest-value use case. Automatic updates eliminate involuntary churn. Improved authorization rates recover revenue you’re currently losing. The implementation is simpler than full checkout tokenization. And the payback period is measured in months.

Plan for agentic commerce interfaces even if you’re not building them yet.

Think about how AI agents would interact with your checkout flow.

What information would they need?

What guardrails would you require?

How would returns and disputes work?

These questions will become practical very soon.

For physical retail, tokenization through mobile wallets is already standard. Your focus should be on ensuring consistent acceptance across all payment types and training staff to encourage wallet adoption. The transaction quality improvements more than justify any incremental acceptance costs.

Subscription businesses should make tokenization their top infrastructure priority.

You’re losing 9% of revenue to failed payments. Network tokenization with automatic updates recovers roughly half of that. On $10 million in recurring revenue, that’s $450,000. The implementation typically costs a fraction of the annual benefit.
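
You can plug your own revenue into the same calculation. This is a quick Python sketch with a function name I made up, `recovered_from_failed_payments`, and it takes the 9% and “roughly half” figures above at face value.

```python
def recovered_from_failed_payments(
    recurring_revenue: float, failure_share: float, recovery_share: float
) -> float:
    """Revenue recovered when a share of failed payments is fixed automatically."""
    lost_to_failures = recurring_revenue * failure_share
    return lost_to_failures * recovery_share


recovered = recovered_from_failed_payments(10_000_000, 0.09, 0.5)
print(f"${recovered:,.0f}")  # $450,000
```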

Monitor your authorization rates by payment method religiously.

Tokenized transactions should show measurably higher approval rates. If they don’t, something’s wrong with your implementation. Work with your PSP to diagnose the issue. Authorization rate differences of 3 to 6 percentage points between token and non-token transactions should be standard.
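
If your PSP’s reporting doesn’t split this out for you, it’s easy to compute from a transaction export. Here’s a minimal Python sketch: the `approval_rates` function and the `network_token` and `raw_pan` labels are my own naming, and the sample data is made up purely to show the calculation.

```python
from collections import defaultdict


def approval_rates(transactions):
    """transactions: iterable of (payment_method, approved) pairs."""
    counts = defaultdict(lambda: [0, 0])  # method -> [approved, total]
    for method, approved in transactions:
        counts[method][1] += 1
        if approved:
            counts[method][0] += 1
    return {method: ok / total for method, (ok, total) in counts.items()}


# Made-up sample: 100 tokenized and 100 raw-PAN attempts.
sample = (
    [("network_token", True)] * 91 + [("network_token", False)] * 9
    + [("raw_pan", True)] * 86 + [("raw_pan", False)] * 14
)
rates = approval_rates(sample)
gap_points = (rates["network_token"] - rates["raw_pan"]) * 100
print(f"token {rates['network_token']:.1%}, raw PAN {rates['raw_pan']:.1%}, "
      f"gap {gap_points:.1f} points")
# token 91.0%, raw PAN 86.0%, gap 5.0 points
```

Track that gap over time. If it shrinks toward zero, that’s your cue to call your PSP.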

Don’t wait for regulation to force your hand.

The merchants implementing tokenization now are seeing benefits while their competitors are still planning. By 2028, when regulatory pressure intensifies, you want to be optimizing an existing system, not scrambling to deploy a new one.

The 2030 Convergence Point

Three forces converge by 2030: universal tokenization, biometric authentication, and AI agent commerce.

Mastercard eliminates manual card entry and passwords entirely in Europe, then expands globally. Visa reaches similar coverage without the explicit deadline, driven by market adoption rather than mandate. The other networks follow because they have to.

Consumers stop typing card numbers.

They authenticate with fingerprints, face scans, or passkeys stored in password managers. The checkout flow compresses to seconds. Cart abandonment rates drop. Authorization rates climb above 90% for the first time.

AI agents handle routine purchases automatically under pre-approved mandates. Your smart fridge orders groceries. Your calendar books travel. Your email assistant pays invoices. Each transaction uses tokenized credentials with programmed spending limits and merchant restrictions.

The infrastructure that makes this possible is tokenization. Without it, the security model fails. With it, the entire digital commerce experience transforms.

Payment companies that understand this transition, who’ve built the infrastructure, who’ve captured the data on fraud reduction and authorization improvements, will be positioned to capture outsized value in a trillion-dollar market.

The ones who dismissed tokenization as incremental or waited for others to move first will be explaining why their fraud rates run higher and their authorization rates lag behind competitors who moved sooner.

The reality is that 2030 isn’t another distant deadline. It’s just 1,500 days away.

The transformation’s already started. The only question is whether you’re building the infrastructure or reacting to what others have built.
